Usability evaluation methods for hospital information systems: a systematic review

Study selection

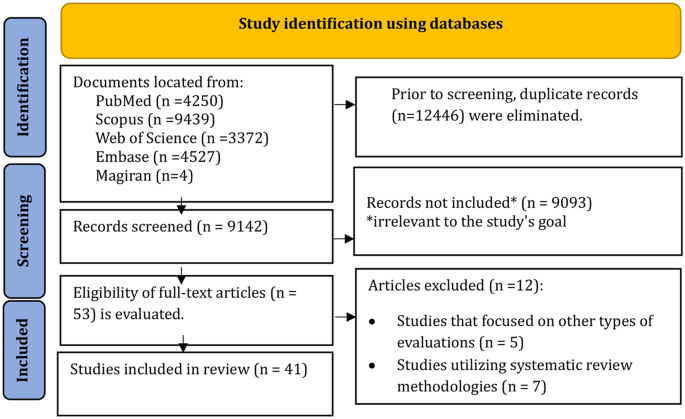

Out of an initial pool of 21,592 articles, a total of 41 studies were selected for final analysis following the screening process. The findings revealed that UT (34%) and HE (34%) were the most commonly employed usability assessment methods for HIS. The primary evaluation tools consisted of standardized checklists (36%) and questionnaires (40%), both of which predominantly focused on assessing key usability components, including user satisfaction (45%), efficiency (45%), and error reduction (40%).

The analyzed HIS subsystems encompassed NIS, LIS, CPOE, and other related subsystems, with different studies employing varied evaluation tools and methodologies tailored to each specific subsystem. Figure 1 presents the study selection process and search flowchart.

Flowchart of the study selection

Quality assessment

All included studies received a quality assessment score above 9, indicating a high level of methodological rigor. No studies were excluded based on quality assessment criteria (Appendix 2).

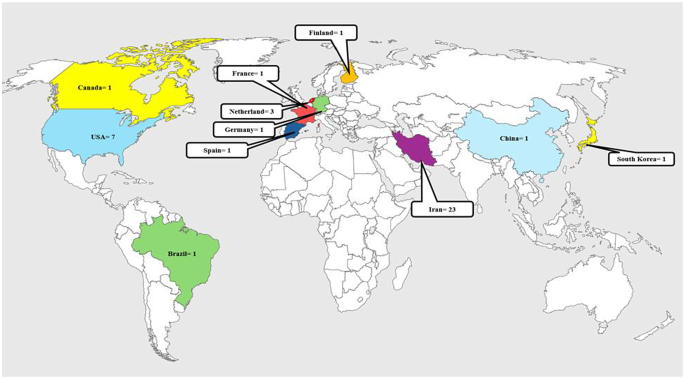

Geographical distribution of included studies

Among the 41 included studies, most were conducted in Iran (n = 23, 56%), followed by the United States (n = 7, 17%) and the Netherlands (n = 3, 7%). Other countries—including France, Germany, Canada, Finland, Brazil, South Korea, China, and Spain—each contributed one study (2%). Overall, 56% of studies originated from developing countries, indicating a notable research interest in HIS usability in resource-limited settings, alongside continued activity in technologically advanced nations. This geographical pattern reflects both global engagement and regional disparities in HIS usability research (Fig. 2).
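The country shares above follow directly from the study counts with n = 41. The minimal Python sketch below reproduces the rounded percentages. It uses only the counts reported in this paragraph.

```python
# Number of included studies per country, as reported in this review (n = 41).
COUNTRY_COUNTS = {
    "Iran": 23,
    "United States": 7,
    "Netherlands": 3,
    "France": 1,
    "Germany": 1,
    "Canada": 1,
    "Finland": 1,
    "Brazil": 1,
    "South Korea": 1,
    "China": 1,
    "Spain": 1,
}


def percentage_shares(counts: dict[str, int]) -> dict[str, int]:
    """Return each country's share of all studies, rounded to a whole percent."""
    total = sum(counts.values())
    return {country: round(100 * n / total) for country, n in counts.items()}


if __name__ == "__main__":
    shares = percentage_shares(COUNTRY_COUNTS)
    print(f"Total studies: {sum(COUNTRY_COUNTS.values())}")
    for country, n in COUNTRY_COUNTS.items():
        print(f"{country}: n = {n} ({shares[country]}%)")
```

Because each single-study country rounds down to 2%, the rounded shares sum to 96% rather than 100%. This is a normal side effect of rounding and does not indicate missing studies.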

Geographical distribution of the included studies

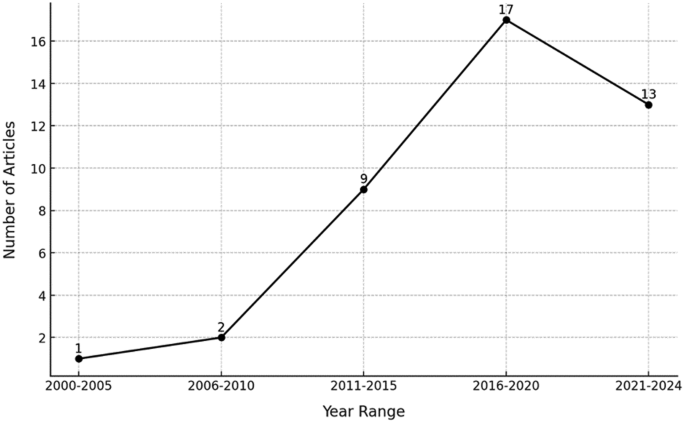

Temporal trends in published studies


Temporal analysis revealed a marked increase in HIS usability research over the past two decades. Initial activity was limited, with only 3% of studies published between 2000 and 2005 and 5% between 2006 and 2010. A more substantial rise occurred in 2011–2015 (20%), followed by a peak in 2016–2020 (41%), coinciding with widespread HIS adoption and heightened awareness of usability challenges. From 2021 to early 2024, a period of just over three years, research remained active (32%), with growing emphasis on innovative solutions to usability problems. These trends reflect a sustained and evolving interest in HIS usability evaluation (Fig. 3).

Hospital information system subsystems

Before presenting the results for each HIS subsystem, it is useful to summarize the evaluation methods and tools employed in the reviewed studies. The most commonly used evaluation methods were HE (34%), UT (34%), TA (15%), and CW (5%). Evaluation tools varied. Structured questionnaires (40%), such as the System Usability Scale (SUS) and the Post-Study System Usability Questionnaire (PSSUQ), and standardized checklists (36%), particularly Nielsen's heuristics, were the most prevalent. These tools were selected for their ability to measure core usability criteria such as efficiency, satisfaction, error prevention, learnability, and system flexibility. Combining qualitative and quantitative approaches across studies enabled a comprehensive assessment of real-world usability in diverse HIS subsystems.

Nursing information system (NIS)

A total of 8 studies (20%) focused on NIS usability evaluation, highlighting key challenges related to efficiency, effectiveness, and user error reduction. The findings suggest that a combination of user-based methods such as think-aloud protocols and standardized questionnaires, alongside expert-based approaches like HE, is the most effective strategy for assessing NIS usability. This integrated approach enables a comprehensive identification of usability issues, facilitating system redesign and enhancing user experience [8, 20,21,22,23,24,25,26].

Laboratory information system (LIS)

Three studies (7%) examined LIS usability, emphasizing its role in workflow optimization and user interaction enhancement [12, 27, 28]. The evidence suggests that direct user observation, standardized questionnaires, and HE together provide the most effective usability assessment. This approach helps identify practical challenges, informs system redesign, and contributes to improving efficiency and user satisfaction.

Computerized physician order entry system (CPOE)

Five studies (12%) explored CPOE usability, confirming its effectiveness in reducing medication errors and improving system efficiency [26, 29,30,31,32]. The recommended evaluation approach includes HE, UT, and standardized tools such as the System Usability Scale (SUS) and usability questionnaires. This combined methodology enhances system efficiency, minimizes prescribing errors, and improves user satisfaction.

Radiology information system (RIS)

Four studies (10%) focused on RIS usability, primarily examining its impact on reducing image data processing time and increasing user satisfaction [12, 33,34,35]. The most effective usability evaluation approach combines UT, HE, SUS, and standardized questionnaires. These methods help streamline image processing, optimize workflow efficiency, and enhance overall system usability.

Admission, discharge, and transfer system (ADT)

Five studies (12%) assessed ADT usability, indicating that usability evaluation tools mainly focused on effectiveness, efficiency, and user satisfaction [24, 36,37,38,39]. The integration of UT, HE, and standardized usability tools (SUS, questionnaires) is recommended for comprehensive usability assessment. This approach allows for precise problem identification, improved system efficiency, and enhanced user experience.

Pharmacy information system (PIS)

Only one study (2%) evaluated PIS usability [40]. The recommended approach includes UT combined with qualitative tools, such as satisfaction questionnaires and user feedback analysis. This methodology contributes to system design optimization and enhanced user experience.

Emergency information system (EIS)

Three studies (7%) focused on EIS usability [7, 41, 42]. The most effective approach integrates UT to identify performance issues, combined with HE to analyze interface design factors. These methods support system designers in better understanding user needs, leading to improved usability and user-centered design enhancements.

Picture archiving and communication system (PACS)

Two studies (5%) examined PACS usability [17, 43]. The preferred evaluation method involves UT to identify operational issues, combined with task analysis to understand complex workflow requirements. These approaches facilitate the development of more intuitive PACS systems, ensuring improved usability and enhanced user experience.

Clinical decision support system (CDSS)

Four studies (10%) focused on CDSS usability assessment [31, 44,45,46]. The most effective evaluation approach includes HE for identifying usability issues in early design stages, complemented by UT to assess real-world user interactions. These methods aid in improving system design and ensuring more effective decision support for healthcare professionals.

Electronic patient records (EPR, EHR, EMR)

Eight studies (20%) investigated EPR, EHR, and EMR usability [8, 20, 47,48,49,50,51,52]. Findings emphasize the importance of user-centered design and system usability improvements. The recommended approach integrates qualitative and quantitative usability assessment methods, including user feedback surveys and task analysis, to evaluate real-world workflow efficiency. This methodology supports targeted usability improvements, enhancing system accessibility and user satisfaction.

To facilitate cross-subsystem comparison, Table 1 summarizes the distribution of evaluation methods, tools, and primary usability criteria used across the main HIS subsystems. This comparative overview supports a clearer understanding of methodological trends [7, 8, 10, 12, 17, 20, 22,23,24,25, 27,28,29,30,31,32,33,34, 36,37,38,39,40,41,42,43,44,45,46,47,48,49, 51, 53,54,55,56,57,58,59,60]. A comparative analysis revealed that HE and UT were consistently applied across most subsystems, particularly in NIS, CPOE, and RIS. However, their use varied based on system complexity and context. For example, task-intensive systems like CPOE and ADT often employed structured UT to capture workflow-specific usability challenges, while more modular systems like PACS or CDSS favored HE during early design stages. Likewise, standardized tools such as SUS and PSSUQ were predominantly used in EPR and CDSS studies to capture user satisfaction and interface efficiency. This variation highlights the need to align evaluation strategies with subsystem characteristics and user interaction patterns.

This summary table highlights methodological consistency and divergence across HIS subsystems. While certain evaluation strategies such as heuristic evaluation and SUS are broadly applied, others—like task analysis or cognitive walkthroughs—are selectively used based on subsystem characteristics. The inclusion of both quantitative and qualitative tools further illustrates the multidimensional nature of usability assessment in hospital settings.
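A study can be cited under more than one subsystem. For example, reference [8] appears under both NIS and EPR. As a result, the per-subsystem counts above are not mutually exclusive. The Python sketch below tallies the citation numbers given in each subsystem paragraph and separates the total number of subsystem assignments from the distinct studies they involve. It assumes that each citation number denotes one included study, and it covers only the subsystems discussed above.

```python
from collections import Counter

# Citation numbers listed under each HIS subsystem heading in this section.
# Assumption: each citation number corresponds to one included study.
SUBSYSTEM_REFS: dict[str, set[int]] = {
    "NIS": {8, 20, 21, 22, 23, 24, 25, 26},
    "LIS": {12, 27, 28},
    "CPOE": {26, 29, 30, 31, 32},
    "RIS": {12, 33, 34, 35},
    "ADT": {24, 36, 37, 38, 39},
    "PIS": {40},
    "EIS": {7, 41, 42},
    "PACS": {17, 43},
    "CDSS": {31, 44, 45, 46},
    "EPR/EHR/EMR": {8, 20, 47, 48, 49, 50, 51, 52},
}


def total_assignments(refs_by_subsystem: dict[str, set[int]]) -> int:
    """Sum of per-subsystem study counts (a study may be counted more than once)."""
    return sum(len(refs) for refs in refs_by_subsystem.values())


def distinct_studies(refs_by_subsystem: dict[str, set[int]]) -> set[int]:
    """Union of all citation numbers, so each study is counted once."""
    return set().union(*refs_by_subsystem.values())


def shared_studies(refs_by_subsystem: dict[str, set[int]]) -> dict[int, list[str]]:
    """Map each study cited under two or more subsystems to those subsystems."""
    counts = Counter(ref for refs in refs_by_subsystem.values() for ref in refs)
    return {
        ref: sorted(name for name, refs in refs_by_subsystem.items() if ref in refs)
        for ref in sorted(counts)
        if counts[ref] > 1
    }


if __name__ == "__main__":
    print("Per-subsystem total:", total_assignments(SUBSYSTEM_REFS))
    print("Distinct studies:", len(distinct_studies(SUBSYSTEM_REFS)))
    for ref, names in shared_studies(SUBSYSTEM_REFS).items():
        print(f"[{ref}] appears under: {', '.join(names)}")
```

The paragraphs above cite 43 subsystem assignments, which correspond to 37 distinct citation numbers. Six studies ([8], [12], [20], [24], [26], [31]) each appear under two subsystems. For this reason, the subsystem percentages should not be expected to sum to 100%.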

Usability assessment tools used in HISs

Checklists

Checklists were employed in 18 studies (40%), with the Nielsen usability checklist being the most commonly used, appearing in 16 studies (36%) [8, 12, 17, 21, 22, 24,25,26,27, 31, 33, 34, 37, 47, 53, 60]. Additionally, a custom-designed checklist was used in one study (2%) [61].

Questionnaires

A total of 18 studies (40%) utilized questionnaires as a usability evaluation tool. While some studies relied solely on questionnaires, others combined them with interviews and direct observations to enhance the depth of usability assessments [7, 8, 10, 23, 25, 39,40,41, 43, 46, 48, 49, 54, 56,57,58, 62, 63].

Interviews and observations

Interviews and direct observations were employed in 6 studies (14%). These studies often incorporated questionnaires alongside interviews or combined interviews with observational methods to gather more comprehensive usability insights [17, 26, 29, 30, 32, 50].

System usability scale (SUS)

The SUS was used in 5 studies (12%), reflecting its role as a standardized tool for assessing the overall usability of HIS [7, 28, 42, 44, 56].
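This review does not describe how the individual studies scored the SUS. For reference, the standard scoring rule for the 10-item, 5-point scale converts one participant's responses to a score from 0 to 100, as follows:

- Odd-numbered (positively worded) items contribute the response minus 1.
- Even-numbered (negatively worded) items contribute 5 minus the response.
- The summed contributions are multiplied by 2.5.

The minimal Python sketch below implements this rule. The participant responses are invented for illustration.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Score one participant's SUS questionnaire (ten items, each rated 1-5)."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("each SUS response must be between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1  # odd items are positively worded
        else:
            total += 5 - response  # even items are negatively worded
    return total * 2.5  # rescale the 0-40 raw sum to 0-100


if __name__ == "__main__":
    # Hypothetical responses from three participants (illustration only).
    participants = [
        [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
        [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
        [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
    ]
    scores = [sus_score(p) for p in participants]
    print("Scores:", scores)
    print("Mean SUS score:", mean(scores))
```

Studies usually report the mean score across participants, as in the last line of the sketch.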

Overall, checklists and standardized questionnaires were the most frequently used usability evaluation tools in HIS research, indicating their broad applicability in structured and systematic usability assessments.

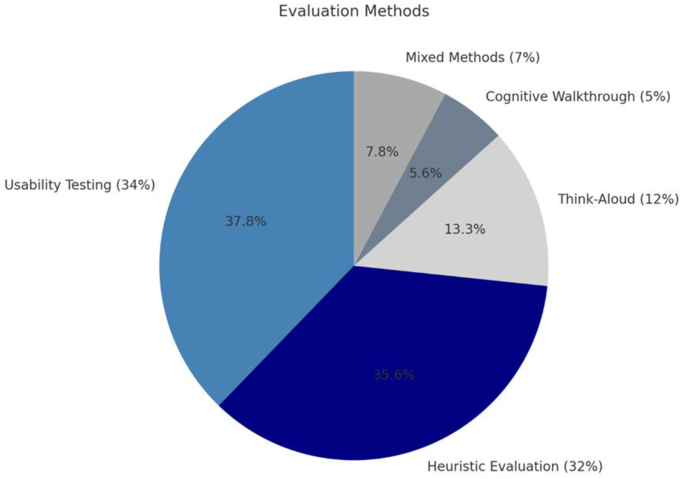

Usability evaluation methods in HIS

The findings revealed a wide range of usability evaluation methods, each serving a distinct role in assessing different aspects of system usability. These methods included HE, UT, TA protocols, and CW. Some studies implemented a combination of multiple methods to achieve a more comprehensive usability analysis. Figure 4 illustrates the distribution of usability evaluation methods applied in HIS-related studies.

Distribution of usability evaluation methods used in studies related to hospital information systems

Usability testing (UT)

UT, which focuses on real-world user interactions with the system, was one of the two most frequently used methods, applied in 14 studies (34%). This method is particularly valuable for evaluating system performance in practical environments, allowing researchers to analyze actual user experiences and system functionality [8, 17, 20, 23, 28,29,30, 39, 41, 44, 46, 54, 56, 58].
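UT sessions usually yield task-level performance data. The Python sketch below is a hypothetical illustration, not a procedure taken from the reviewed studies. It assumes each attempt is logged with a completion flag, the time on task, and an error count. From these records it derives three measures: completion rate (effectiveness), mean time on completed tasks (efficiency), and errors per attempt. These correspond to criteria discussed later in this review. The record format, task name, and values are all invented.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class TaskAttempt:
    """One participant's attempt at one test task (hypothetical record format)."""
    participant: str
    task: str
    completed: bool
    seconds: float
    errors: int


def summarize(attempts: list[TaskAttempt]) -> dict[str, Optional[float]]:
    """Compute simple effectiveness, efficiency, and error measures."""
    if not attempts:
        raise ValueError("no task attempts to summarize")
    completed = [a for a in attempts if a.completed]
    return {
        # Effectiveness: share of attempts that were completed.
        "completion_rate": len(completed) / len(attempts),
        # Efficiency: mean time on task, counting completed attempts only.
        "mean_time_completed_s": mean(a.seconds for a in completed) if completed else None,
        # Errors: average number of user errors per attempt.
        "errors_per_attempt": sum(a.errors for a in attempts) / len(attempts),
    }


# Made-up attempts for a hypothetical "enter medication order" task.
EXAMPLE_ATTEMPTS = [
    TaskAttempt("P1", "enter_medication_order", True, 120.0, 0),
    TaskAttempt("P2", "enter_medication_order", True, 150.0, 1),
    TaskAttempt("P3", "enter_medication_order", False, 300.0, 3),
    TaskAttempt("P4", "enter_medication_order", True, 90.0, 0),
]

if __name__ == "__main__":
    print(summarize(EXAMPLE_ATTEMPTS))
```

Satisfaction, the remaining dimension, is normally captured separately after the session, for example with a questionnaire such as the SUS.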

Heuristic evaluation (HE)

HE was applied equally often, in 14 studies (34%). This method relies on predefined usability principles to identify usability issues and is predominantly used in early-stage system development to refine design components [12, 24, 25, 27, 30,31,32,33,34, 37, 47, 49, 60, 64].
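In a typical heuristic evaluation, several evaluators inspect the interface independently against the heuristics, and the problems they find are then rated for severity. The Python sketch below assumes Nielsen's 0 to 4 severity scale, where 0 means not a usability problem and 4 means a usability catastrophe. It averages ratings across evaluators to rank problems and counts problems per heuristic. The record format and all findings are invented for illustration and are not drawn from the reviewed studies.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Finding:
    """A usability problem found during a heuristic evaluation (hypothetical format)."""
    problem: str
    heuristic: str            # the Nielsen heuristic the problem violates
    ratings: tuple[int, ...]  # one severity rating (0-4) per evaluator


def prioritize(findings: list[Finding]) -> list[tuple[str, float]]:
    """Rank problems by mean severity across evaluators, most severe first."""
    for f in findings:
        if not f.ratings or any(not 0 <= r <= 4 for r in f.ratings):
            raise ValueError(f"invalid severity ratings for {f.problem!r}")
    ranked = [(f.problem, mean(f.ratings)) for f in findings]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


def violations_per_heuristic(findings: list[Finding]) -> Counter:
    """Count how many distinct problems were attributed to each heuristic."""
    return Counter(f.heuristic for f in findings)


# Invented findings from three hypothetical evaluators of a CPOE screen.
EXAMPLE_FINDINGS = [
    Finding("Dose field accepts free text without validation", "Error prevention", (4, 3, 4)),
    Finding("Allergy alert can be dismissed without a reason", "Error prevention", (3, 4, 3)),
    Finding("No confirmation after an order is saved", "Visibility of system status", (3, 3, 2)),
    Finding("Date formats differ between screens", "Consistency and standards", (2, 1, 2)),
    Finding("Unexplained local abbreviations in menus",
            "Match between system and the real world", (1, 2, 1)),
]

if __name__ == "__main__":
    for problem, severity in prioritize(EXAMPLE_FINDINGS):
        print(f"{severity:.2f}  {problem}")
    print(violations_per_heuristic(EXAMPLE_FINDINGS))
```

Averaging across evaluators reduces the influence of any single rater. The per-heuristic count shows which design principles are violated most often.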

Think-aloud protocol

Used in 6 studies (15%), the think-aloud method focuses on analyzing users’ cognitive processes while interacting with the system. This approach enables researchers to uncover deeper usability challenges and gain insights into decision-making processes [17, 38, 40, 42, 45, 65].

Cognitive walkthrough (CW)

This method was applied in 2 studies (5%), emphasizing users’ cognitive processes when performing specific tasks. CW is particularly effective for evaluating complex workflows and task-driven interactions in HIS usability assessments [51, 63].

Combination of multiple methods

Three studies (7%) employed a combination of two or more usability evaluation methods to ensure a more holistic analysis of system usability. This multi-method approach allows for a more comprehensive assessment of different usability dimensions, strengthening the reliability of findings [26, 29, 55].

The selection of evaluation methods in the reviewed studies appears to be influenced by the specific goals of each subsystem's usability assessment. For instance, HE was typically applied in early-stage design or redesign processes, especially for subsystems with static interfaces such as RIS and PACS. In contrast, UT was preferred for dynamic systems like CPOE and ADT, where real-time task execution and workflow integration are critical. Similarly, think-aloud protocols were often used to explore user decision-making in high-risk environments such as CDSS and EIS. This methodological alignment suggests an intentional strategy to tailor usability evaluations to subsystem complexity, user roles, and clinical risk levels.
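The alignment described in this paragraph can be expressed as a simple rule set. The Python sketch below is a hypothetical simplification, not a decision procedure proposed by the reviewed studies. Several elements are assumptions made for illustration: the three boolean characteristics, the subsystem profiles, and the fallback to HE plus UT when no characteristic applies.

```python
def suggest_methods(*, early_design: bool, dynamic_workflow: bool,
                    high_risk_decisions: bool) -> list[str]:
    """Hypothetical rule set mirroring the method-subsystem alignment described above."""
    methods = []
    if early_design:
        methods.append("HE")  # inspection against heuristics during (re)design
    if dynamic_workflow:
        methods.append("UT")  # real-time task execution and workflow integration
    if high_risk_decisions:
        methods.append("TA")  # verbalized reasoning in high-risk decision-making
    # Assumed fallback: the two methods applied most often across the reviewed studies.
    return methods or ["HE", "UT"]


# Illustrative subsystem profiles (assumed, simplified characterizations).
PROFILES = {
    "RIS/PACS redesign": dict(early_design=True, dynamic_workflow=False, high_risk_decisions=False),
    "CPOE/ADT": dict(early_design=False, dynamic_workflow=True, high_risk_decisions=False),
    "CDSS/EIS": dict(early_design=False, dynamic_workflow=True, high_risk_decisions=True),
}

if __name__ == "__main__":
    for name, profile in PROFILES.items():
        print(name, suggest_methods(**profile))
```

Real studies often combined several methods, as noted above, so the rules add methods cumulatively rather than picking exactly one.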

Usability evaluation criteria for HISs

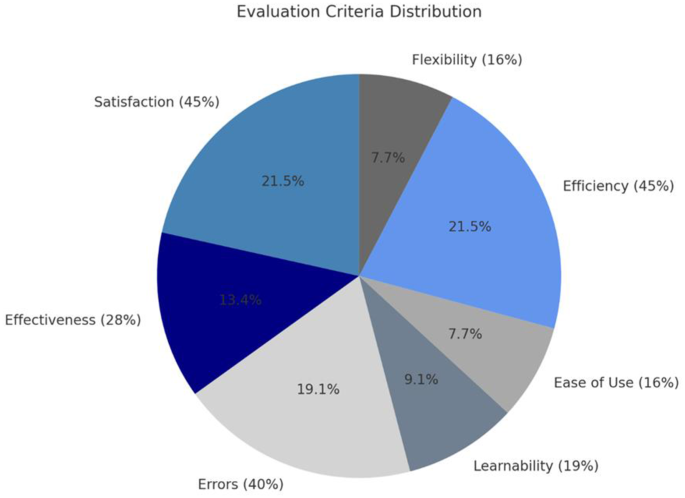

This review identified several key criteria used to evaluate the usability of HIS. Among these, user satisfaction and system efficiency received the most attention, each examined in 19 studies (45%). This finding highlights the importance of positive user experience and optimal system performance in HIS usability.

User errors were examined in 17 studies (40%), reflecting a strong focus on minimizing human error and enhancing system accuracy. System effectiveness was analyzed in 12 studies (28%). This criterion concerns the ability of HIS to deliver accurate and meaningful outcomes that support clinical and administrative workflows.

Other usability dimensions received comparatively less attention. Learnability, assessed in 8 studies (19%), focused on user training and ease of adoption, while ease of use, discussed in 7 studies (16%), examined how intuitive and user-friendly HIS interfaces are. Flexibility, also explored in 7 studies (16%), emphasized the system’s adaptability to evolving user needs and clinical requirements.

These findings highlight a predominant focus on user-centric criteria in HIS usability research while also underscoring the need for greater emphasis on system adaptability and ease of learning. Figure 5 illustrates the percentage distribution of usability evaluation criteria across the reviewed studies.

Percentage distribution of usability evaluation criteria
